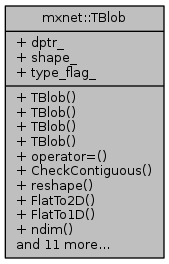

Tensor blob class that can hold a tensor of any dimension, on any device, and of any data type. It is a weak type that can be used to transfer data through an interface. TBlob itself does not involve any arithmetic operations, but it can be converted to a tensor of fixed dimension for further operations.


| Return type | Member | Description |
|---|---|---|
| | `TBlob(void)` | Default constructor. The default copy and assignment operations also work. |
| | `template<typename DType> TBlob(DType *dptr, const TShape &shape, int dev_mask, int dev_id=-1)` | Constructs a TBlob from contiguous memory. |
| | `TBlob(void *dptr, const TShape &shape, int dev_mask, int type_flag, int dev_id=-1)` | Constructs a TBlob from contiguous memory. |
| | `template<typename Device, int dim, typename DType> TBlob(const mshadow::Tensor<Device, dim, DType> &src)` | Constructs a TBlob from a tensor. |
| `TBlob &` | `template<typename Device, int dim, typename DType> operator=(const mshadow::Tensor<Device, dim, DType> &src)` | Assigns from a tensor. |
| `bool` | `CheckContiguous(void) const` | |
| `TBlob` | `reshape(const TShape &shape) const` | Reshapes to the given shape. |
| `mshadow::Tensor<Device, 2, DType>` | `template<typename Device, typename DType> FlatTo2D(mshadow::Stream<Device> *stream=NULL) const` | Flattens the tensor to 2 dimensions, collapsing the higher dimensions together. |
| `mshadow::Tensor<Device, 1, DType>` | `template<typename Device, typename DType> FlatTo1D(mshadow::Stream<Device> *stream=NULL) const` | Flattens the tensor to 1 dimension, collapsing all dimensions together. |
| `int` | `ndim(void) const` | Returns the number of dimensions of the tensor. |
| `index_t` | `size(index_t idx) const` | Returns the size of the `idx`-th dimension, counting from the highest dimension. |
| `index_t` | `Size(void) const` | Returns the total number of elements in the tensor. |
| `DType *` | `template<typename DType> dptr() const` | Returns the data pointer as `DType *`. |
| `int` | `dev_mask() const` | Returns the device mask of the corresponding device. |
| `int` | `dev_id() const` | Returns the device index of the corresponding device. |
| `const DLTensor &` | `dltensor() const` | Returns the corresponding `DLTensor`. |
| `mshadow::Tensor<Device, dim, DType>` | `template<typename Device, int dim, typename DType> get(mshadow::Stream<Device> *stream=NULL) const` | Fetches the tensor with the specified dimension. If `dim` does not match the stored dimension, an error is issued. |
| `mshadow::Tensor<Device, dim, DType>` | `template<typename Device, int dim, typename DType> get_with_shape(const mshadow::Shape<dim> &shape, mshadow::Stream<Device> *stream=NULL) const` | Fetches a tensor with the given shape. If the size does not match the stored size, an error is issued. |
| `mshadow::Tensor<Device, 3, DType>` | `template<typename Device, typename DType> FlatTo3D(int axis, mshadow::Stream<Device> *stream=NULL) const` | Flattens the tensor to 3 dimensions, collapsing the dimensions before and after the specified axis. |
| `mshadow::Tensor<Device, 3, DType>` | `template<typename Device, typename DType> FlatTo3D(int axis_begin, int axis_end, mshadow::Stream<Device> *stream=NULL) const` | Flattens the tensor to 3 dimensions, collapsing the dimensions `[0, axis_begin)`, `[axis_begin, axis_end]`, and `(axis_end, ndim)`. |
| `mshadow::Tensor<Device, dim, DType>` | `template<typename Device, int dim, typename DType> FlatToKD(mshadow::Stream<Device> *stream=NULL) const` | Flattens the tensor to the specified number of dimensions, collapsing the highest dimensions or padding with higher dimensions. |
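The flattening members differ only in which axes they merge, so the resulting shapes can be worked out with plain arithmetic. The following is a minimal standalone sketch of that shape arithmetic, not the library's implementation: `ProdRange`, `Flat2DShape`, and `Flat3DShape` are hypothetical helper names, shapes are modelled as `std::vector<std::size_t>` rather than `TShape`, and the 2-D case assumes that "collapse the higher dimensions" means merging all leading dimensions while keeping the last one.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: product of shape[begin], ..., shape[end - 1].
// An empty range yields 1.
std::size_t ProdRange(const std::vector<std::size_t>& shape,
                      std::size_t begin, std::size_t end) {
  std::size_t prod = 1;
  for (std::size_t i = begin; i < end; ++i) prod *= shape[i];
  return prod;
}

// Assumed 2-D rule: merge all leading dimensions, keep the last one.
std::array<std::size_t, 2> Flat2DShape(const std::vector<std::size_t>& shape) {
  assert(!shape.empty());
  const std::size_t n = shape.size();
  return std::array<std::size_t, 2>{{ProdRange(shape, 0, n - 1), shape[n - 1]}};
}

// 3-D rule as documented: collapse [0, axis_begin),
// [axis_begin, axis_end], and (axis_end, ndim).
std::array<std::size_t, 3> Flat3DShape(const std::vector<std::size_t>& shape,
                                       std::size_t axis_begin,
                                       std::size_t axis_end) {
  assert(axis_begin <= axis_end && axis_end < shape.size());
  return std::array<std::size_t, 3>{{
      ProdRange(shape, 0, axis_begin),
      ProdRange(shape, axis_begin, axis_end + 1),
      ProdRange(shape, axis_end + 1, shape.size())}};
}

// Single-axis form: collapse everything before and after `axis`.
std::array<std::size_t, 3> Flat3DShape(const std::vector<std::size_t>& shape,
                                       std::size_t axis) {
  return Flat3DShape(shape, axis, axis);
}
```

For a shape of `(2, 3, 4, 5)`, `Flat3DShape(shape, 1, 2)` gives `(2, 12, 5)`, `Flat3DShape(shape, 1)` gives `(2, 3, 20)`, and, under the assumption stated above, `Flat2DShape(shape)` gives `(24, 5)`. In every case the total element count stays at 120, which is why flattening needs no data copy.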


Like a tensor, this data structure behaves like a pointer class and does not implicitly allocate or deallocate memory. It is useful for holding tensors of different dimensions until they are processed further.
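The pointer-like, weakly typed behaviour can be illustrated with a small standalone sketch. This is not the TBlob source: `MiniBlob`, `TypeFlag`, and `FlagOf` are hypothetical names invented for the illustration, the type tags do not correspond to real `type_flag` values, and the runtime type check in `dptr` is an assumption modelled on the documented dimension and size checks.

```cpp
#include <cstddef>
#include <stdexcept>
#include <utility>
#include <vector>

// Hypothetical type tags; real type_flag values are not reproduced here.
enum class TypeFlag { kFloat32, kInt32 };

// Maps a C++ element type to its hypothetical tag.
template <typename T> struct FlagOf;
template <> struct FlagOf<float> {
  static constexpr TypeFlag value() { return TypeFlag::kFloat32; }
};
template <> struct FlagOf<int> {
  static constexpr TypeFlag value() { return TypeFlag::kInt32; }
};

// Non-owning, type-erased view: stores a raw pointer, a shape, and a type
// tag. It never allocates or frees the memory it points to.
class MiniBlob {
 public:
  template <typename T>
  MiniBlob(T* dptr, std::vector<std::size_t> shape)
      : dptr_(dptr), shape_(std::move(shape)), type_flag_(FlagOf<T>::value()) {}

  // Total number of elements described by the shape.
  std::size_t Size() const {
    std::size_t n = 1;
    for (std::size_t d : shape_) n *= d;
    return n;
  }

  // Recover a typed pointer; throws if T differs from the stored type.
  template <typename T>
  T* dptr() const {
    if (FlagOf<T>::value() != type_flag_) {
      throw std::runtime_error("MiniBlob: requested type does not match");
    }
    return static_cast<T*>(dptr_);
  }

 private:
  void* dptr_;
  std::vector<std::size_t> shape_;
  TypeFlag type_flag_;
};
```

Copying a `MiniBlob` copies only the pointer and metadata, so both copies refer to the same buffer, and the caller remains responsible for keeping that buffer alive for as long as any view of it is in use.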
